The Puzzle of Perceptual Invariance Despite Acoustic Variability

The human auditory system perceives speech sounds as stable and meaningful even though the acoustic signal varies substantially: the same word sounds different across speakers, speaking rates, neighboring sounds (coarticulation), and listening environments. This ability, known as perceptual invariance, has intrigued researchers for decades. This article explores the mechanisms thought to underlie it, covering auditory scene analysis, neural pathways, computational models, cross-modal integration, and implications for speech technology.

Auditory Scene Analysis

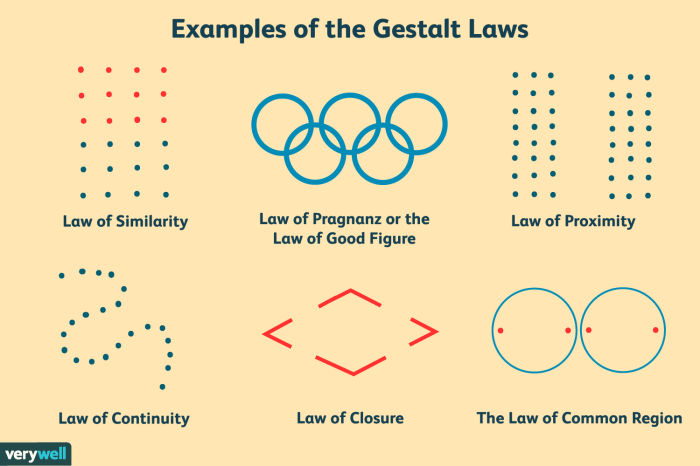

Auditory scene analysis (ASA) is the process by which the auditory system segregates and groups acoustic signals into distinct objects or streams. It plays a crucial role in perceptual invariance, allowing us to perceive speech and other sounds as stable entities despite variations in the acoustic input.

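ASA relies on several kinds of cues to separate sounds: spectral cues (for example, harmonicity, where components that share a common fundamental frequency are grouped together), temporal cues (for example, components that start and stop together), and spatial cues (differences in the timing and level of a sound at the two ears). These cues help us identify individual sound sources, even in complex environments with overlapping sounds.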
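The harmonicity cue can be sketched in a few lines. Components that lie close to whole-number multiples of a common fundamental frequency (F0) tend to be grouped into one source. This sketch assumes the component frequencies and the candidate F0s are already known (a real system would have to estimate both from the signal); the function name `group_by_harmonicity` and the 3% mistuning tolerance are illustrative assumptions, not values taken from the perception literature.

```python
def group_by_harmonicity(partials_hz, f0_candidates_hz, tolerance=0.03):
    """Assign each partial to the candidate F0 whose harmonic series it fits best.

    A partial "fits" a candidate F0 when its relative deviation from the
    nearest harmonic of that F0 is at most `tolerance`. Partials that fit
    no candidate are returned separately. (Illustrative sketch only.)
    """
    groups = {f0: [] for f0 in f0_candidates_hz}
    ungrouped = []
    for freq in partials_hz:
        best_f0, best_error = None, None
        for f0 in f0_candidates_hz:
            harmonic = max(1, round(freq / f0))  # nearest harmonic number (at least 1)
            target = harmonic * f0
            error = abs(freq - target) / target  # relative mistuning
            if error <= tolerance and (best_error is None or error < best_error):
                best_f0, best_error = f0, error
        if best_f0 is None:
            ungrouped.append(freq)
        else:
            groups[best_f0].append(freq)
    return groups, ungrouped


# Two hypothetical voices with F0s of 100 Hz and 130 Hz, plus one stray component.
partials = [100, 130, 200, 260, 300, 390, 400, 455]
groups, ungrouped = group_by_harmonicity(partials, [100, 130])
print(groups)     # {100: [100, 200, 300, 400], 130: [130, 260, 390]}
print(ungrouped)  # [455]
```

A component can sit near harmonics of two different F0s (400 Hz is within 3% of 390 Hz, the third harmonic of 130 Hz), so the sketch assigns it to whichever candidate it fits most closely; listeners can also draw on other cues to resolve such conflicts.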

For example, in speech perception, ASA enables us to separate the speech of a target speaker from background noise or competing speakers. This process is essential for understanding speech in noisy environments and for following a single conversation in a crowded room, a challenge often called the cocktail party problem.
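The spatial cue that helps single out a target speaker can be illustrated with interaural time difference (ITD) estimation. A sound from one side reaches the nearer ear slightly earlier, and cross-correlating the two ear signals, the idea behind classic binaural models, recovers that delay. This is a minimal sketch assuming clean, equally long, discretely sampled ear signals; `estimate_itd` and its parameters are hypothetical names chosen for illustration.

```python
import random


def estimate_itd(left, right, sample_rate_hz, max_lag):
    """Estimate the interaural time difference by brute-force cross-correlation.

    Returns the lag (in seconds) that maximizes sum(left[i] * right[i + lag]).
    A positive result means the right-ear signal is delayed, i.e. the sound
    reached the left ear first.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i + lag] for i in range(n) if 0 <= i + lag < n)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate_hz


# Hypothetical example: broadband noise that reaches the right ear 3 samples late.
rng = random.Random(0)
fs = 44_100
left = [rng.uniform(-1.0, 1.0) for _ in range(2_000)]
delay = 3
right = [0.0] * delay + left[:-delay]
itd = estimate_itd(left, right, fs, max_lag=30)
print(f"{itd * 1e6:.1f} microseconds")  # 3 samples at 44.1 kHz, about 68 microseconds
```

A `max_lag` of 30 samples at 44.1 kHz spans about 0.68 ms, chosen here to roughly cover the sub-millisecond delays a human head produces.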

Neural Mechanisms

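The neural pathways involved in processing auditory information begin with the auditory nerve, which carries signals from the cochlea in the inner ear to the cochlear nucleus in the brainstem. From there, signals ascend through further brainstem nuclei (including the superior olivary complex, where inputs from the two ears first converge), the inferior colliculus in the midbrain, and the medial geniculate nucleus of the thalamus before reaching the auditory cortex in the temporal lobes.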

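Within the auditory cortex and neighboring areas, different regions appear to be specialized for different aspects of sound, such as pitch, spatial location, and timbre. Together, these regions build a representation of the auditory scene that links what each sound is to where it comes from; one influential proposal divides this processing into a ventral "what" pathway for identifying sounds and a dorsal "where" pathway for locating them.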

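Neural plasticity is thought to play a significant role in the development of perceptual invariance. As we are exposed to different voices and acoustic environments, the neural pathways involved in ASA and speech perception adapt, refining their ability to separate and group sounds. For example, during the first year of life, infants become increasingly attuned to the speech-sound categories of their native language, while their sensitivity to many non-native contrasts declines.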

Computational Models

Researchers have developed computational models that attempt to simulate perceptual invariance, both to replicate the mechanisms of ASA and to test hypotheses about how the brain processes auditory information.

One family of models, known as computational auditory scene analysis (CASA), takes a largely bottom-up approach: it separates and groups sounds using acoustic features such as harmonicity, common onset, and spatial location.
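A toy version of bottom-up sequential grouping can be written as a greedy rule based on frequency proximity, loosely modeled on listening experiments with alternating low and high tones. Each incoming tone joins the stream whose latest tone is closest in pitch, unless the jump exceeds a threshold, in which case it starts a new stream. The function names and the 4-semitone threshold are illustrative assumptions; real streaming also depends on presentation rate, which this sketch ignores.

```python
import math


def semitone_distance(f1_hz, f2_hz):
    """Pitch distance between two frequencies in semitones (12 per octave)."""
    return 12 * abs(math.log2(f2_hz / f1_hz))


def stream_tones(tone_freqs_hz, max_jump_semitones=4.0):
    """Greedily group a tone sequence into streams by frequency proximity."""
    streams = []  # each stream is a list of tone frequencies, in order of arrival
    for freq in tone_freqs_hz:
        best_stream, best_dist = None, None
        for stream in streams:
            dist = semitone_distance(stream[-1], freq)
            if dist <= max_jump_semitones and (best_dist is None or dist < best_dist):
                best_stream, best_dist = stream, dist
        if best_stream is None:
            streams.append([freq])  # too far from every stream: start a new one
        else:
            best_stream.append(freq)
    return streams


# A repeating low-high-low pattern with tones an octave apart splits into two streams...
print(stream_tones([500, 1000, 500, 500, 1000, 500]))  # [[500, 500, 500, 500], [1000, 1000]]
# ...while tones about one semitone apart stay in a single stream.
print(stream_tones([500, 530, 500, 500, 530, 500]))    # [[500, 530, 500, 500, 530, 500]]
```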

Other models take a top-down approach, using learned knowledge of sound sources together with semantic and contextual information to identify and track auditory objects.
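One common way to formalize top-down influence is Bayesian: contextual knowledge supplies a prior over candidate words, which is combined with the likelihood of the acoustic evidence. The sketch below uses made-up numbers for an acoustically ambiguous word heard at the end of "We swam all afternoon at the"; the function name and all values are hypothetical and not taken from any specific published model.

```python
def combine_with_context(acoustic_likelihood, context_prior):
    """Bayes' rule: posterior is proportional to likelihood times prior.

    Both arguments map candidate words to non-negative numbers; the result
    is normalized so the posterior probabilities sum to 1.
    """
    unnormalized = {
        word: acoustic_likelihood[word] * context_prior.get(word, 0.0)
        for word in acoustic_likelihood
    }
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("no candidate has support from both acoustics and context")
    return {word: score / total for word, score in unnormalized.items()}


# Hypothetical values: the acoustics slightly favor "peach",
# but the sentence context strongly favors "beach".
likelihood = {"peach": 0.6, "beach": 0.4}
prior = {"peach": 0.1, "beach": 0.9}
posterior = combine_with_context(likelihood, prior)
print({word: round(p, 3) for word, p in posterior.items()})  # {'peach': 0.143, 'beach': 0.857}
```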

Cross-Modal Integration

Cross-modal integration refers to the process by which information from different sensory modalities, such as vision and hearing, is combined to enhance perception.

In speech perception, vision can provide additional cues that help us to separate speech from noise and to understand what is being said. For example, lip-reading can help us to understand speech in noisy environments.
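A common way to model this benefit is reliability-weighted (maximum-likelihood) cue combination: each modality provides an estimate with some variance, and the combined estimate weights each cue by its inverse variance. The sketch assumes independent Gaussian noise on each cue and uses a hypothetical continuum from /ba/ (0) to /da/ (1); the auditory and visual values are made up and deliberately mismatched so that the shift in weighting is visible.

```python
def combine_cues(cues):
    """Inverse-variance weighted combination of independent Gaussian cues.

    `cues` is a list of (estimate, variance) pairs. Returns the combined
    estimate and its variance, which is never larger than the smallest
    input variance.
    """
    weights = [1.0 / variance for _, variance in cues]
    total_weight = sum(weights)
    estimate = sum(w * est for w, (est, _) in zip(weights, cues)) / total_weight
    return estimate, 1.0 / total_weight


visual = (0.8, 0.09)        # lip movements: moderately reliable
quiet_audio = (0.2, 0.01)   # clear audio: highly reliable
noisy_audio = (0.2, 0.25)   # the same audio in noise: unreliable

print(combine_cues([quiet_audio, visual]))  # estimate about 0.26, close to the auditory value
print(combine_cues([noisy_audio, visual]))  # estimate about 0.64, shifted toward the visual value
```

As the auditory variance grows, its weight shrinks and the percept is pulled toward what the lips show, which is one way to express why lip-reading helps most in noise.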

Visual information influences speech perception even when the audio is clear. In the McGurk effect, an auditory /ba/ dubbed onto video of a speaker articulating /ga/ is often heard as /da/, a percept that matches neither input alone.
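The McGurk effect has been modeled with Massaro's fuzzy logical model of perception (FLMP), in which each modality assigns every response alternative a degree of support between 0 and 1; the supports are multiplied and then normalized across alternatives. The support values below are hypothetical, chosen only to show how a fused /da/ response can emerge; they are not fitted to data.

```python
def flmp(auditory_support, visual_support):
    """Fuzzy logical model of perception (relative goodness rule).

    Each argument maps response alternatives to support values in [0, 1].
    Supports are multiplied per alternative and normalized to probabilities.
    """
    products = {r: auditory_support[r] * visual_support[r] for r in auditory_support}
    total = sum(products.values())
    return {r: p / total for r, p in products.items()}


# Hypothetical supports: audio of /ba/ paired with video of a speaker saying /ga/.
auditory = {"ba": 0.8, "da": 0.5, "ga": 0.1}
visual = {"ba": 0.05, "da": 0.6, "ga": 0.7}  # no visible lip closure, so little support for /ba/
responses = flmp(auditory, visual)
print(max(responses, key=responses.get))  # da
```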

Implications for Speech Technology

Automatic speech recognition (ASR) systems face the same problem that perceptual invariance solves for human listeners: the same words arrive with very different acoustics. Incorporating the principles of perceptual invariance can make these systems more robust to noise, channel differences, and other acoustic variations.
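One long-standing technique in this spirit is cepstral mean normalization (CMN). A fixed recording channel, such as a particular microphone or telephone line, approximately multiplies the speech spectrum by a constant filter response; in the log-spectral or cepstral domain that becomes a constant additive offset, which subtracting the per-utterance mean removes. The sketch assumes cepstral feature frames have already been computed and are given as lists of floats; the feature values and the channel offset are hypothetical.

```python
def cepstral_mean_normalize(frames):
    """Subtract each coefficient's mean over the utterance from every frame.

    `frames` is a non-empty list of equal-length feature vectors (e.g. cepstral
    coefficients per analysis frame).
    """
    n_frames = len(frames)
    n_coeffs = len(frames[0])
    means = [sum(frame[k] for frame in frames) / n_frames for k in range(n_coeffs)]
    return [[frame[k] - means[k] for k in range(n_coeffs)] for frame in frames]


# Hypothetical features for one utterance, and the same utterance through a
# different channel, modeled as a constant offset on every frame.
clean = [[1.0, 2.0], [3.0, 4.0], [5.0, 0.0]]
channel_offset = [0.5, -1.0]
recorded = [[c + o for c, o in zip(frame, channel_offset)] for frame in clean]

print(cepstral_mean_normalize(clean))     # [[-2.0, 0.0], [0.0, 2.0], [2.0, -2.0]]
print(cepstral_mean_normalize(recorded))  # same values: the channel offset is gone
```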

One example of how these principles have been applied to speech recognition is noise reduction. Some noise-reduction algorithms draw directly on ASA, for example by estimating a time-frequency mask that keeps the regions of a spectrogram dominated by speech and suppresses those dominated by background noise.
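A representative ASA-inspired idea is the ideal binary mask from the computational auditory scene analysis literature: keep each time-frequency cell of a noisy spectrogram where speech energy exceeds noise energy by a local criterion, and zero the rest. It is called "ideal" because computing it requires the separate speech and noise signals, so practical systems must estimate it. This sketch uses tiny hypothetical power spectrograms given as nested lists (time frames by frequency channels); the function names are illustrative.

```python
def ideal_binary_mask(speech_power, noise_power, criterion_db=0.0):
    """Return 1 for time-frequency cells whose local SNR exceeds `criterion_db`, else 0."""
    ratio = 10 ** (criterion_db / 10)  # convert the dB criterion to a power ratio
    return [
        [1 if s > ratio * n else 0 for s, n in zip(s_row, n_row)]
        for s_row, n_row in zip(speech_power, noise_power)
    ]


def apply_mask(mixture_power, mask):
    """Zero out the masked cells of a mixture spectrogram."""
    return [
        [m * x for m, x in zip(m_row, x_row)]
        for m_row, x_row in zip(mask, mixture_power)
    ]


# Hypothetical 2 x 3 spectrograms (rows = time frames, columns = frequency channels).
speech = [[4.0, 0.5, 2.0], [0.0, 3.0, 1.0]]
noise = [[1.0, 1.0, 1.0], [1.0, 1.0, 2.0]]
# Approximates mixture power as speech + noise power (assumes uncorrelated signals).
mixture = [[s + n for s, n in zip(s_row, n_row)] for s_row, n_row in zip(speech, noise)]

mask = ideal_binary_mask(speech, noise)
print(mask)                       # [[1, 0, 1], [0, 1, 0]]
print(apply_mask(mixture, mask))  # [[5.0, 0.0, 3.0], [0.0, 4.0, 0.0]]
```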

Another example is the use of speaker adaptation techniques, which allow speech recognition systems to adapt to the voice of a particular speaker, improving recognition accuracy.
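A simpler relative of speaker adaptation, common in phonetics, is speaker normalization. Lobanov normalization, for example, converts each formant frequency into a z-score using the mean and standard deviation of that formant across the speaker's own vowels, so that speakers with different vocal tract sizes map onto comparable values. The formant values below are hypothetical, and the second speaker is modeled as a uniform 20% scaling of the first, a simplification; real differences between speakers are not perfectly uniform.

```python
from statistics import mean, pstdev


def lobanov_normalize(formants_by_vowel):
    """z-score each formant within one speaker.

    `formants_by_vowel` maps a vowel label to a list of formant frequencies
    in Hz, e.g. {"i": [F1, F2], ...}. Every vowel must list the same formants,
    and each formant must vary across vowels (otherwise its SD is zero).
    """
    vowels = list(formants_by_vowel)
    n_formants = len(formants_by_vowel[vowels[0]])
    normalized = {v: [] for v in vowels}
    for k in range(n_formants):
        values = [formants_by_vowel[v][k] for v in vowels]
        mu, sigma = mean(values), pstdev(values)
        for v in vowels:
            normalized[v].append((formants_by_vowel[v][k] - mu) / sigma)
    return normalized


# Hypothetical F1/F2 values (Hz) for three vowels from two speakers.
speaker_a = {"i": [300, 2300], "a": [700, 1200], "u": [300, 800]}
speaker_b = {v: [1.2 * f for f in fs] for v, fs in speaker_a.items()}  # shorter vocal tract

norm_a = lobanov_normalize(speaker_a)
norm_b = lobanov_normalize(speaker_b)
for v in speaker_a:
    # Raw formants differ between speakers, but the normalized values match.
    print(v, [round(z, 2) for z in norm_a[v]], [round(z, 2) for z in norm_b[v]])
```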

Frequently Asked Questions

What is the role of auditory scene analysis in perceptual invariance?

Auditory scene analysis helps us segregate and group auditory objects, enabling us to focus on specific sounds amidst a complex acoustic environment.

How do neural pathways contribute to perceptual invariance?

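The ascending auditory pathway processes auditory information in successive stages, from the auditory nerve through the brainstem and thalamus to the auditory cortex. These stages, refined by experience-dependent plasticity, contribute to the formation of stable auditory percepts.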

What are the limitations of existing computational models of perceptual invariance?

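Current models do not yet fully capture the complexity and flexibility of human perceptual invariance. Their performance generally degrades more than that of human listeners in adverse conditions such as reverberation, multiple competing talkers, and unfamiliar accents, and they often generalize poorly to conditions not represented in their training data.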